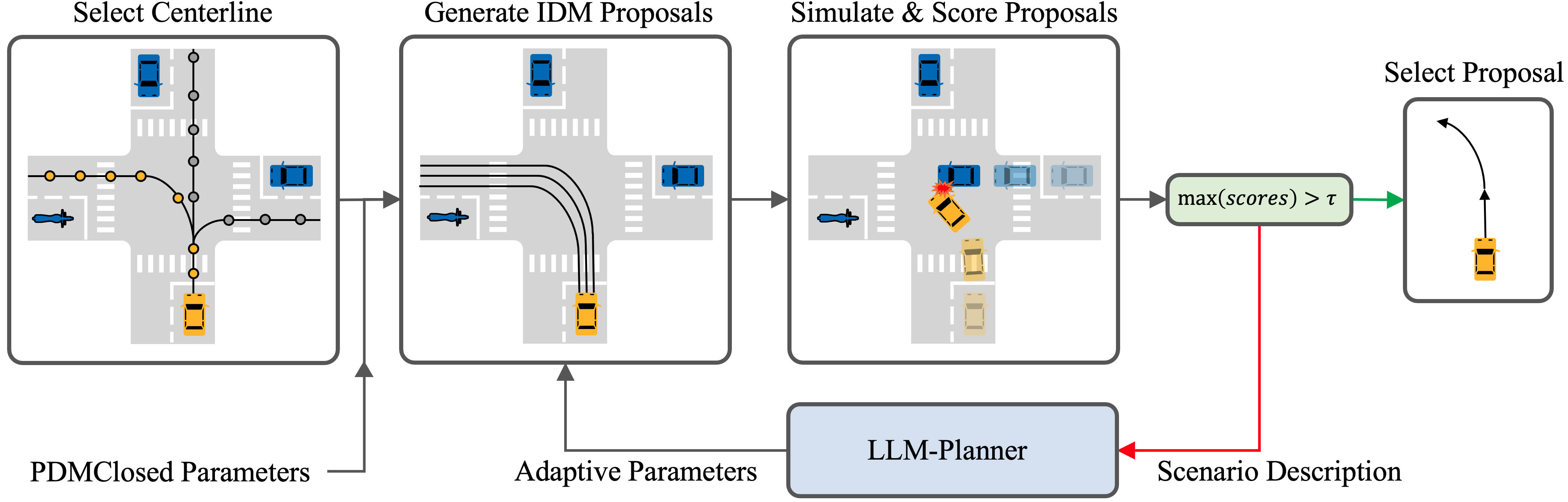

LLM-Assist is a novel hybrid planning approach that combines a rule-based planner (PDM-Closed) with an LLM-based planner to tackle challenging, high-uncertainty autonomous driving scenarios.

Abstract

Although planning is a crucial component of the autonomous driving stack, researchers have yet to develop robust planning algorithms that are capable of safely handling the diverse range of possible driving scenarios. Learning-based planners suffer from overfitting and poor long-tail performance. On the other hand, rule-based planners generalize well, but might fail to handle scenarios that require complex driving maneuvers.

To address these limitations, we investigate whether the common-sense reasoning capabilities of Large Language Models (LLMs) such as GPT4 and Llama2 can be used to generate plans for self-driving vehicles. In particular, we develop a novel hybrid planner that pairs a conventional rule-based planner with an LLM-based planner.

Guided by the common-sense reasoning abilities of LLMs, our approach navigates complex scenarios that existing planners struggle with and produces well-reasoned outputs, while remaining grounded by working alongside the rule-based approach. Through extensive evaluation on the nuPlan benchmark, we achieve state-of-the-art performance, outperforming all existing pure learning- and rule-based methods across most metrics.

Metrics

LLM-Assist evaluated on the nuPlan closed-loop challenges on the Val14 split. GPT-3-AssistPAR achieves state-of-the-art performance on almost all metrics in both closed-loop challenges, reducing the number of dangerous driving scenarios by 11%.

BibTeX

@article{sharan2023llm,
  title={LLM-Assist: Enhancing Closed-Loop Planning with Language-Based Reasoning},
  author={Sharan, SP and Pittaluga, Francesco and Kumar B G, Vijay and Chandraker, Manmohan},
  journal={arXiv preprint arXiv:2401.00125},
  year={2023}
}